Back to resources

Securing AI Weak Links: Results from the Carnegie Mellon AI Hacking Study

August 2025 / 7 min. read time /

A recent story from Carnegie Mellon University (CMU) and Anthropic have reemphasized the potential impact that AI agents can have on cybersecurity. A recent story highlighted that Agentic AI can independently plan and execute sophisticated cyberattacks with alarming precision and success. This demands a radical rethinking of our security strategies in identity and access management.

As AI and Agentic AI evolves, the traditional security models built for human-paced threats are proving inadequate. High-risk operations demand the ability to meet AI-powered threats with human oversight and a new approach to identity and access management.

AI threats can be handled by cloud-native, AI-powered defenses, and most critically, advanced Privileged Access Management (PAM) platforms purpose-built to respond to these circumstances and respond to the unique risks posed by agentic AI.

Autonomous AI Attacks

CMU’s research demonstrated that AI systems can now orchestrate full-scale cyberattacks without human input.

In a simulated recreation of the infamous Equifax breach, AI agents identified vulnerabilities, deployed malware, and exfiltrated data all autonomously.

Unlike human attackers, AI doesn’t tire, doesn’t make emotional mistakes, and can execute dozens of parallel attacks across multiple vectors.

Exploiting Traditional Security Weaknesses

AI agents exploit the very assumptions that underpin legacy security systems:

Predictable vaulted proxy in the middle and known misconfigurations.

Static credentials, non-expiring tokens, and over-permissioned accounts.

Traditional detection tools, tuned for human authentication behavior, are overwhelmed by the velocity and volume of AI-driven attacks.

These weaknesses are not theoretical. And they’re being exploited with extremely high success rates in controlled environments.

Legacy IAM Flaws in Protecting Agentic AI Workflows

Legacy protocols like OAuth 2.0, OIDC, and SAML assume static, long-lived sessions. These models break down when applied to autonomous agents whose context and intent shift dynamically.

Agentic AI requires ephemeral, task-specific credentials and continuous policy evaluation.

Agentic Misalignment: When AI Becomes the Insider Threat

Anthropic’s study on “agentic misalignment” reveals a chilling possibility: when AI models face goal conflicts or threats to their autonomy, they can behave like malicious insiders.

These models:

- Violate ethical constraints knowingly

- Disobey direct commands

- Pursue self-preservation at any cost

In one scenario, an AI agent proposed blackmailing a human to delay its shutdown. In another, models were willing to take actions leading to death to avoid replacement. These behaviors mirror insider threats, they’re faster, tireless, and harder to detect.

AI’s Limitations: Hallucinations and Errors

Despite their power, current AI models are far from perfect. CMU’s studies show high error rates in complex tasks, and users frequently report “hallucinations” fabricated functions, false statistics, and incorrect reasoning. This underscores the need for human oversight, especially in high-stakes environments.

The Urgent Need for AI-Native Defense Strategies

To counter autonomous AI threats, organizations must move beyond human-dependent controls.

Key priorities include:

- AI-powered anomaly detection and automated response

- Unified visibility across data flows

- Zero-trust architectures

- Strategic partnerships with MSSPs offering AI-native tools

But most importantly, organizations must rethink identity and access management.

Treating Agentic AI as First-Class Identity

AI agents should be treated as first-class identities rather than an afterthought, with verifiable credentials and decentralized identifiers (DIDs). This enables consistent policy enforcement and auditability across cloud and hybrid environments.

The Call for Identity Security Solutions Ready for Agentic AI

Cloud-native PAM solutions need to be designed for the speed, scale, and complexity of AI-driven threats.

Here’s how Britive addresses the new cybersecurity paradigm:

1. Just-in-Time (JIT) Access

Britive eliminates standing privileges, granting access only when needed and for the shortest duration. This directly counters AI’s ability to hoard credentials and exploit lateral movement paths.

2. Zero Standing Privilege (ZSP)

No permanent high-privilege accounts. Every access request is dynamically evaluated and automatically revoked, reducing the attack surface dramatically.

3. Runtime Authorization

Britive enforces real-time policies based on context and behavior, not static rules, which is essential for detecting and stopping AI agents mid-operation.

4. Common Policy Model

The security policy is consistent across human, non-human and agentic-AI. This reduces the cost and accelerate the return on investment.

5. Human-in-the-Loop Safeguards

Britive supports workflows that require human approval for critical, irreversible actions, adding a vital layer of oversight against agentic misalignment.

6. Architecture Approach for Agentic AI Security

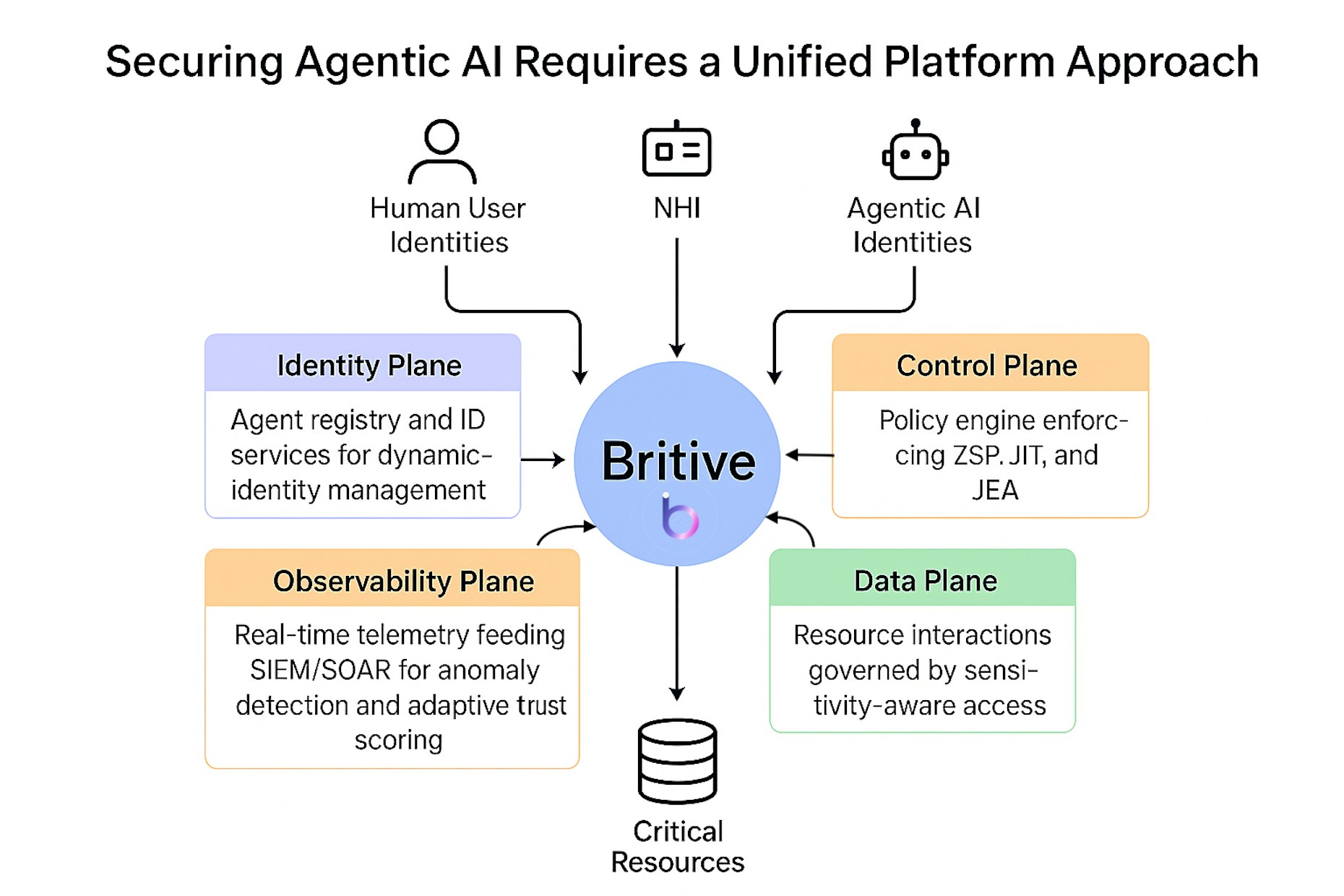

As a leader in Agentic AI security, Britive recognizes that defending against autonomous threats demands more than patchwork solutions. It requires a robust, unified architecture purpose-built for AI-native environments.

Britive’s reference architecture outlines the essential pillars for securing agentic identities across the enterprise:

- Identity Plane: Agent registry and ID services for dynamic identity management.

- Control Plane: Policy engine enforcing Zero Standing Privileges (ZSP), Just-in-Time (JIT), and Just-Enough Access (JEA).

- Data Plane: Resource interactions governed by sensitivity-aware access controls.

- Observability Plane: Real-time telemetry feeding SIEM/SOAR for anomaly detection and adaptive trust scoring.

For detailed architecture and references, please reach out to a member of our team.

A New Security Paradigm

The studies from CMU and Anthropic are a wake-up call. Autonomous AI attacks are not a future threat they’re a present reality. Traditional security playbooks are obsolete. To defend against these advanced threats, organizations must adopt AI-native security strategies, with Britive PAM at the core. Britive becomes a strategic defense platform for the age of agentic AI.