Turning AI Security Frameworks into Practical Controls

December 2025 / 8 min. read

Every major cloud and data platform now has a framework for securing AI. Google has SAIF; AWS has CAF-AI and Generative AI Best Practices; Microsoft has the Secure AI Risk Assessment and Responsible AI Standard; and Databricks recently introduced its AI Security Framework (DASF).

Add to that NIST AI RMF, CSA’s AI Security Alliance, and OWASP’s AI Security Guide, and you get a broad landscape of AI governance blueprints.

In my view, Google SAIF is one of the most holistic frameworks available today. It captures the full lifecycle of AI development, deployment, and protection, bridging the gap between policy and technical enforcement. That said, other frameworks such as AWS CAF-AI, Microsoft Secure AI, and Databricks DASF each add valuable dimensions, from operational governance to data integrity and responsible AI principles.

Because CSP frameworks are technology-agnostic, enterprises should combine their strengths instead of using them in isolation. This ensures consistent coverage and adaptability across multi-cloud and hybrid environments.

Frameworks should be used as roadmaps for best practices and paired with operational controls to truly secure AI systems.

How Enterprises Can Make Frameworks Actionable

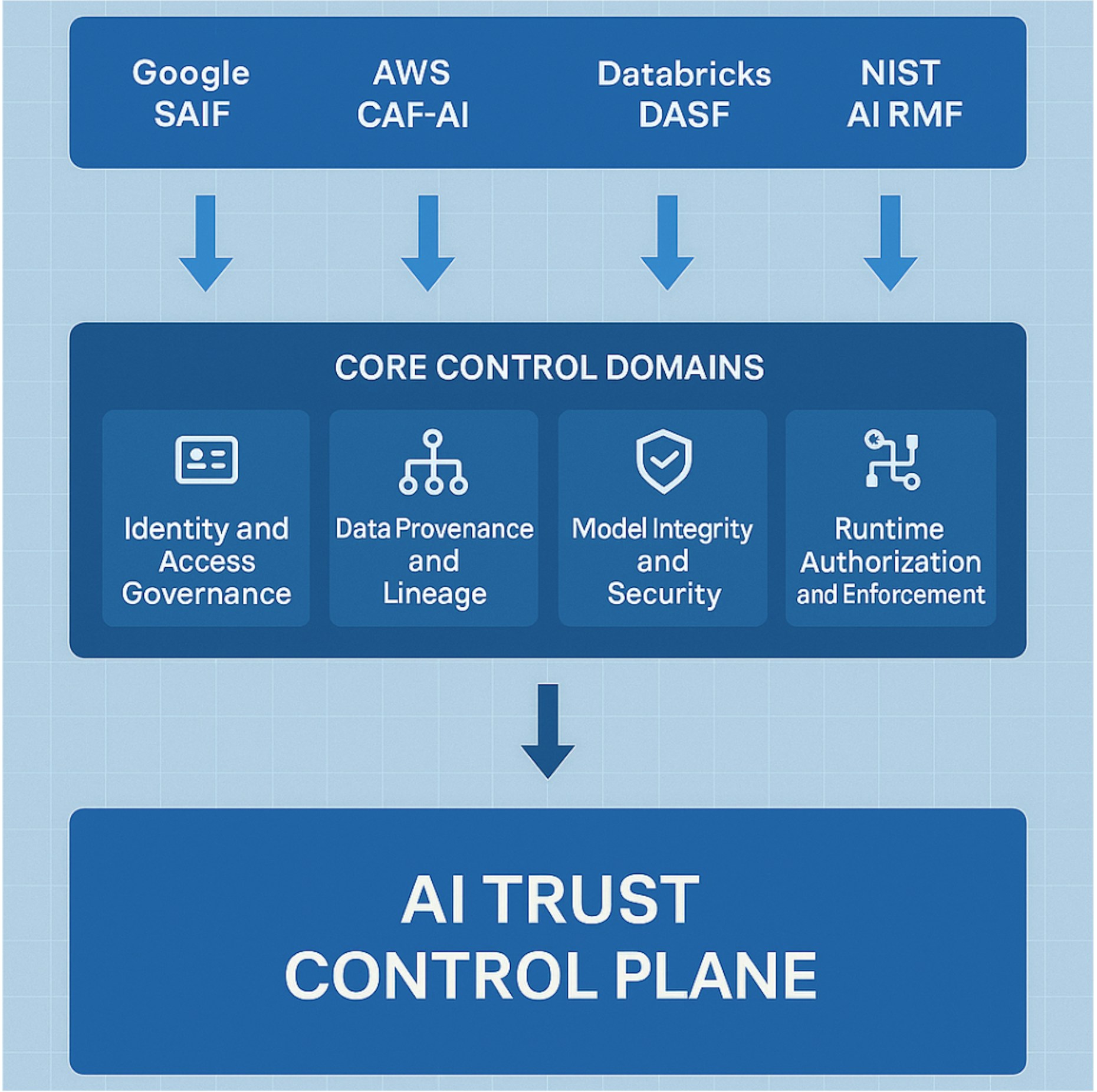

Most organizations stop at policy and governance. AI risk, however, exists at runtime, where data, models, and agents interact dynamically. Enterprises should consider implementing an AI Trust Control Plane to convert identity, data, and model governance into enforceable runtime controls.

The focus should shift from compliance reporting to achieving true operational trust.

Mapping Frameworks to the AI Lifecycle

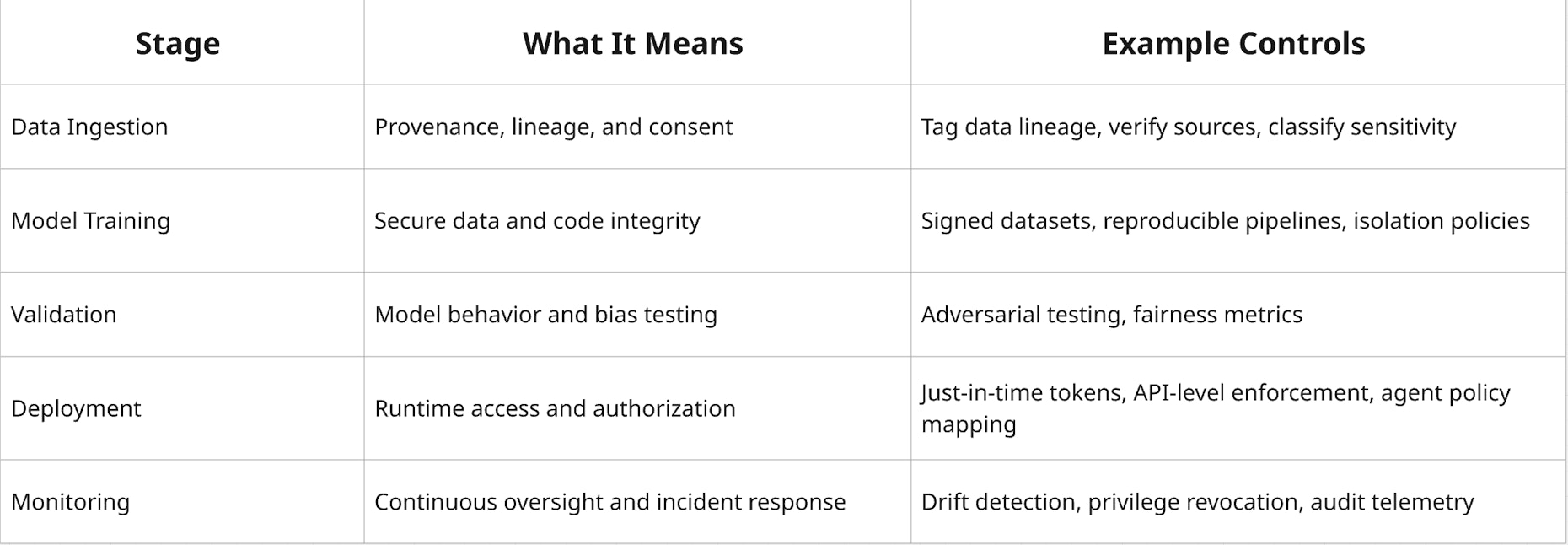

The major frameworks, including SAIF, CAF-AI, DASF, and NIST AI RMF, align to a common lifecycle.

When mapped to the AI lifecycle, frameworks move from theory to control architecture.

Core Control Domains That Make Frameworks Work

Enterprises can operationalize these frameworks through five recurring control pillars supported by an evolving ecosystem of security platforms.

- Identity and Access Governance

This is the foundation of every AI control plane. The standard should be to apply Zero Standing Privilege (ZSP) to both humans and agents, using runtime just-in-time authorization combined with identity federation through commonly used IdPs such as Okta, Ping, Duo, or Entra. Lifecycle governance solutions such as SailPoint can manage identity lifecycles and entitlements across applications and systems.

- Data Provenance and Lineage

Trace data from origin to model. For example, use Databricks Unity Catalog, Collibra, or Alation to govern data movement, and extend controls through Britive to enforce access only when ownership and provenance are validated.

- Model Integrity and Security

Detect and contain vulnerabilities early. Use OWASP AI Security guidance along with AI security platforms such as ProtectAI, TrojAI, or Aim Security to perform model scanning, prompt-injection testing, supply-chain validation, and adversarial risk detection. When anomalies or high-risk model behaviors are detected, integrate with a runtime authorization platform to restrict or revoke access through just-in-time controls.

- Runtime Authorization and Enforcement

Extend Zero Trust security principles to all AI workloads. Use a cloud-native PAM solution for policy-driven, ephemeral access across Amazon Bedrock, Google Vertex AI, Snowflake, and Databricks. Complement it with visibility tools such as Wiz or Prisma Cloud for posture-based access tied to configuration and runtime state.

- Governance and Oversight

Create a unified view of accountability. Form an AI Risk Council led by the CISO to align security, compliance, and engineering. Reference NIST AI RMF, Microsoft's Responsible AI Standard, and ISO/IEC 23894, and use telemetry to show measurable Zero Trust maturity and compliance.
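To make the runtime pillars concrete, here is a minimal Python sketch of just-in-time authorization with a provenance gate and risk-driven revocation. It is an illustration under stated assumptions, not the API of any platform named above: the `Grant` and `JitAuthorizer` classes, the 15-minute cap, and the identity and dataset names are all hypothetical, and a real deployment would delegate these decisions to a PAM or authorization service rather than hold them in memory.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass
class Grant:
    """An ephemeral access grant (hypothetical model, not a vendor type)."""
    identity: str
    resource: str
    expires_at: datetime
    revoked: bool = False

    def is_active(self, now: datetime) -> bool:
        # Access exists only while the grant is unexpired and not revoked.
        return not self.revoked and now < self.expires_at


@dataclass
class JitAuthorizer:
    """Hypothetical in-memory authorizer used only for illustration."""
    verified_datasets: set[str]  # resources whose provenance was validated
    max_ttl: timedelta = timedelta(minutes=15)  # assumed policy maximum
    grants: list[Grant] = field(default_factory=list)

    def request(self, identity: str, resource: str,
                ttl: timedelta, now: datetime) -> Grant:
        # Provenance gate: no access to data that has not been validated.
        if resource not in self.verified_datasets:
            raise PermissionError(f"provenance not verified for {resource}")
        # Time-bound: cap the requested duration at the policy maximum.
        grant = Grant(identity, resource, now + min(ttl, self.max_ttl))
        self.grants.append(grant)
        return grant

    def revoke_for_resource(self, resource: str) -> int:
        """Revoke every un-revoked grant on a resource, for example after
        a model scan flags high-risk behavior. Returns the number revoked."""
        count = 0
        for grant in self.grants:
            if grant.resource == resource and not grant.revoked:
                grant.revoked = True
                count += 1
        return count


now = datetime(2025, 12, 1, 9, 0, tzinfo=timezone.utc)
authz = JitAuthorizer(verified_datasets={"sales_features"})

# The agent asks for four hours but receives at most the policy maximum.
grant = authz.request("agent:forecaster", "sales_features",
                      timedelta(hours=4), now)
active_before = grant.is_active(now + timedelta(minutes=10))

# A detection event triggers revocation; the grant stops working at once.
revoked_count = authz.revoke_for_resource("sales_features")
active_after = grant.is_active(now + timedelta(minutes=10))
```

The point of the sketch is that the agent never holds standing access: every grant is requested at runtime, capped in duration, refused when provenance is unverified, and revocable when a detection signal arrives.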

Should Enterprises Combine Frameworks or Pick One?

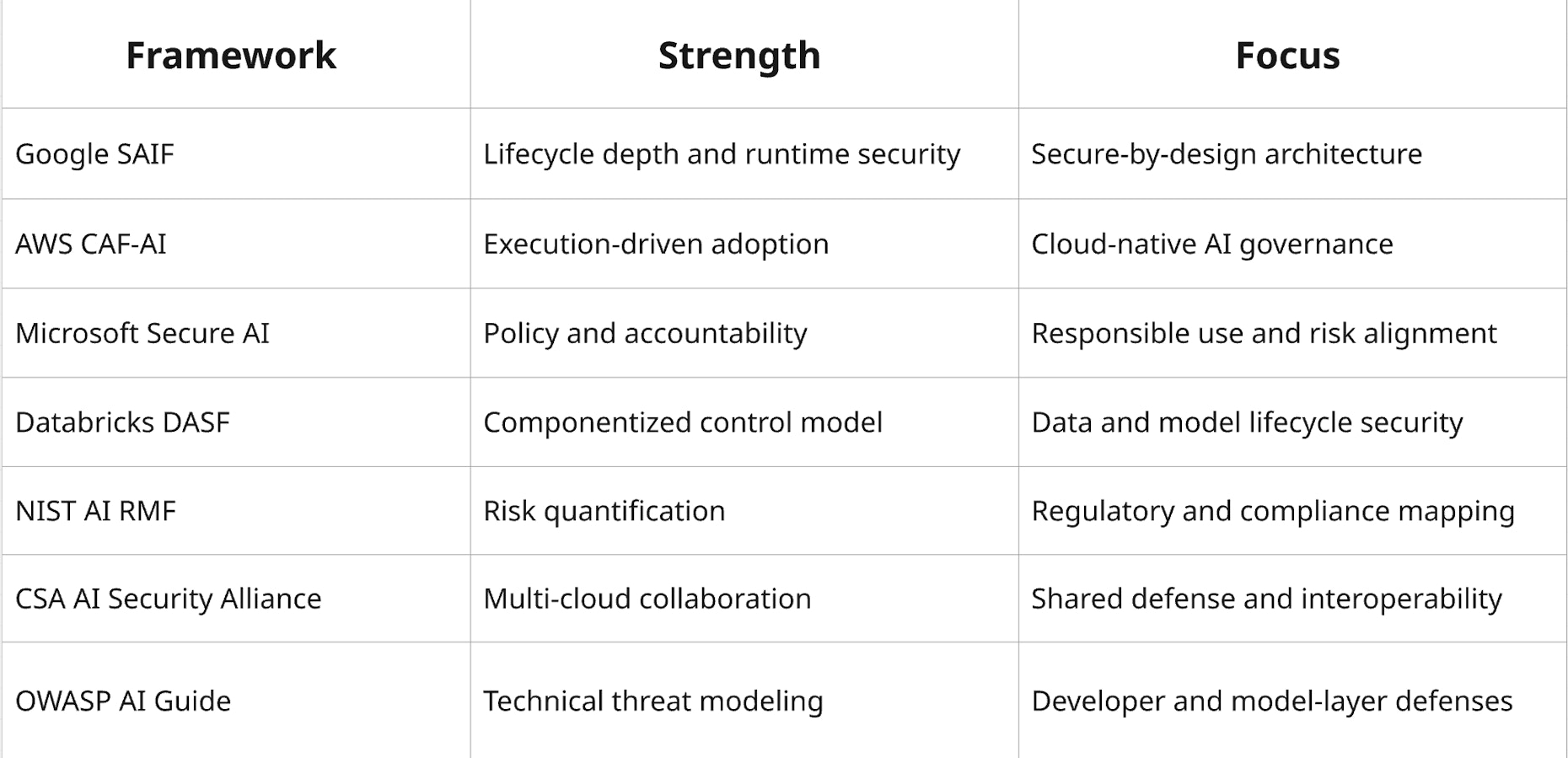

Strong programs should integrate guidance from multiple industry frameworks, such as SAIF for lifecycle structure, CAF-AI for operational alignment, NIST for governance, and DASF for data-platform control, while remaining open to others as needed.

Below are the strengths and focuses for each framework, so organizations can choose which frameworks to follow according to their security priorities:

Ownership of AI Security

AI security succeeds when it is cross-functional.

- CISO and Security teams manage governance and control mapping.

- Engineering teams drive runtime enforcement and automation.

- Data and AI teams ensure provenance and ethical use.

- Legal and Compliance teams oversee documentation and audit alignment.

Cross-functional ownership turns frameworks into measurable outcomes, allowing teams to drive distinct priorities forward within a unified program.

Establishing Non-Negotiable Security Anchors

Many enterprises find it helpful to define a small set of foundational pillars or anchor points that are non-negotiable. These serve as the guardrails that every design decision, process, and vendor solution must align with before measurable KPIs come into play.

While frameworks provide structure and controls offer implementation guidance, anchor points define the intent of your security posture. They ensure every action aligns with core principles that should never be compromised.

Examples of non-negotiable anchors might include:

- Agents and services must never have static or over-permissive access to sensitive data or infrastructure.

- All privileged access must be time-bound, contextual, and auditable.

- Data movement and model training pipelines must maintain verified lineage and provenance.

By defining two or three anchor points early, enterprises create a foundation that guides both architecture and vendor selection. This ensures that tools, processes, and frameworks are all evaluated through the same lens of alignment to these principles.
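One way to apply the same lens consistently is to express the anchors as checks that every proposed design must pass. The Python sketch below is illustrative only: the `AccessDesign` fields and the anchor wording are assumptions made for this example, and the "contextual" requirement is left out because it depends on signals specific to each environment.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class AccessDesign:
    """Hypothetical description of how a proposed agent or service gets access."""
    name: str
    uses_static_credentials: bool
    max_session_minutes: Optional[int]  # None means access never expires
    audit_logged: bool
    lineage_verified: bool


# Each anchor is a name plus a predicate that must hold for every design.
ANCHORS: dict[str, Callable[[AccessDesign], bool]] = {
    "no static or standing access": lambda d: not d.uses_static_credentials,
    "privileged access is time-bound": lambda d: d.max_session_minutes is not None,
    "privileged access is auditable": lambda d: d.audit_logged,
    "pipelines keep verified lineage": lambda d: d.lineage_verified,
}


def violated_anchors(design: AccessDesign) -> list[str]:
    """Return the names of the anchors the design fails, in declaration order."""
    return [name for name, holds in ANCHORS.items() if not holds(design)]


# A retraining job that uses a long-lived key with no expiry.
proposal = AccessDesign(
    name="nightly-retrain",
    uses_static_credentials=True,
    max_session_minutes=None,
    audit_logged=True,
    lineage_verified=True,
)
violations = violated_anchors(proposal)
print(violations)
# ['no static or standing access', 'privileged access is time-bound']
```

Because the anchors live in one place, an architecture review and a vendor evaluation can run the same checks and reach the same verdict.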

These anchors become part of the organization’s security DNA, allowing flexibility in execution while maintaining consistency in protection.

Measuring Progress

Progress must be quantifiable. For example:

- Percentage of AI identities under just-in-time access

- Number of datasets with verified provenance

- Mean time to revoke privileged access

- Percentage of models with signed lineage metadata

- AI Trust Score or Risk Maturity Index

These metrics turn AI security from concept into measurable performance.
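As a rough illustration, most of these metrics reduce to simple ratios and averages over an inventory. The Python sketch below assumes a hypothetical `Inventory` record with made-up figures. It leaves out the AI Trust Score, since any composite score depends on weights an organization has to choose for itself.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Inventory:
    """Hypothetical snapshot of an AI estate; field names are assumptions."""
    ai_identities: int
    ai_identities_jit: int
    models: int
    models_signed_lineage: int
    datasets_verified: int
    revocation_seconds: list[float]  # observed times to revoke privileged access


def percentage(part: int, total: int) -> float:
    """Percentage rounded to one decimal; 0.0 when there is nothing to measure."""
    return 0.0 if total == 0 else round(100 * part / total, 1)


def scorecard(inv: Inventory) -> dict[str, float]:
    return {
        "pct_identities_jit": percentage(inv.ai_identities_jit, inv.ai_identities),
        "datasets_verified": float(inv.datasets_verified),
        "mean_time_to_revoke_s": (
            mean(inv.revocation_seconds) if inv.revocation_seconds else 0.0
        ),
        "pct_models_signed_lineage": percentage(
            inv.models_signed_lineage, inv.models
        ),
    }


# Illustrative numbers only, not measurements from any real environment.
snapshot = Inventory(
    ai_identities=40,
    ai_identities_jit=30,
    models=8,
    models_signed_lineage=6,
    datasets_verified=120,
    revocation_seconds=[30.0, 90.0, 60.0],
)
report = scorecard(snapshot)
```

Tracking the same scorecard over time is what shows whether the program is actually maturing.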

The Path Forward

No single vendor or framework can provide the full picture. Google SAIF set the lifecycle standard, AWS CAF-AI defined operational governance, Microsoft emphasized responsible AI, and Databricks connected data and model control.

Enterprises need to take a hybrid approach, integrating the strengths of each to form a comprehensive AI Trust Control Plane that enforces Zero Trust for humans, services, and AI agents alike.

Security frameworks show the direction, anchor points keep us aligned to core principles, and control planes turn that intent into real, measurable implementation.